What Is Zero Trust Security and Why It Matters

A comprehensive guide to the framework redefining cybersecurity.

Zero Trust Outlook

Unlike other buzzwords, “Zero Trust” has the potential to be one of the most transformative paradigms in cybersecurity—so much so that US federal agencies and enterprises are moving to rapidly adopt Zero Trust.

But why?

Because it encourages organizations to stay hypervigilant in safeguarding their interests. And it isn’t exclusive to governments and state secrets: Zero Trust has the potential to benefit organizations of any size, big or small.

Executive Summary

Zero Trust is a cybersecurity framework that challenges the traditional notion of trusting users and devices inside a network perimeter. It focuses on continuously verifying every access request regardless of origin, minimizing risk by enforcing strict access controls, and assuming that breaches will happen.

Key benefits of Zero Trust include:

- Stronger protection of sensitive data and critical resources.

- Reduced risk of lateral movement by attackers inside the network.

- Simplified compliance with modern regulatory requirements.

- Increased operational visibility and control over user activity.

Successful Zero Trust implementation requires a strategic approach, including identity verification, microsegmentation, and continuous monitoring.

Model Evolution

Before Zero Trust solutions, cybersecurity relied heavily on strong perimeter defenses—firewalls, VPNs, and network segmentation—to keep threats out. This traditional “castle-and-moat” model assumed that users and devices inside the network were trustworthy.

Over time, this approach proved insufficient. The rise of remote work, cloud adoption, and sophisticated attacks exposed the limitations of perimeter security. Zero Trust emerged as a response to these challenges by advocating for “never trust, always verify.”

This evolutionary timeline highlights key moments:

- Early 2000s: Perimeter-based defenses dominate security strategies.

- 2010: Forrester Research analyst John Kindervag coins the term “Zero Trust.”

- Mid-2010s: The rise of cloud computing and mobile workforces weakens perimeter boundaries.

- 2020+: Adoption accelerates due to remote work and high-profile breaches.

What Is Zero Trust?

Before Zero Trust solutions, organizations spent a lot of effort to maintain strong perimeter defenses, investing in network firewalls, intrusion detection, network access control, and other tools to try to keep hackers out of their networks.

After two decades, the verdict on legacy network security is in: It hasn't delivered results. We know this because of the sheer volume of news reports about organizations falling victim to cyberattacks. Between phishing, social engineering, drive-by downloads, exposed endpoints, and zero-day vulnerabilities, motivated hackers have their pick of ways to access a modern enterprise.

Okta’s The State of Zero Trust Security 2023 report reflects the resulting shift: 61 percent of the organizations surveyed said they already have a defined Zero Trust initiative in place, and another 35 percent said they plan to start one.

"Organizations around the world are taking tangible steps toward Zero Trust. Identity is critical for keeping these complex, global workforces collaborating securely and productively." — David Bradbury, Chief Security Officer, Okta

Zero Trust can help keep resources protected, even when hackers have free rein in your network.

Principles

Continuous practices ensure resilient, scalable security.

At its core, Zero Trust is built on these three principles:

1. Verify Explicitly

Always authenticate and authorize access based on real-time data points such as user identity, device health, location, and behavior. It’s not enough to simply log in once; access decisions should be dynamic and risk-aware, adapting to context and continuously reassessing trust.

2. Use Least Privilege Access

Only grant users and devices the minimum level of access required to perform their tasks. This minimizes the potential impact of a compromised account or system and limits lateral movement across networks. It’s a key step toward reducing your attack surface.

3. Assume Breach

Operate as if an attacker is already inside your network. Design your architecture to contain threats early and reduce dwell time. Continuous monitoring, anomaly detection, and automated response mechanisms help detect and isolate malicious activity before it causes damage.

The Basics

Build trust through verification.

All devices and users trying to access a protected resource must be authenticated and authorized:

- Are they who they claim to be?

- Are they allowed to access the resource?

With Zero Trust in place, hackers trying to access a protected resource will either fail the authentication or the authorization check.
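The two checks can be sketched in Python as a deny-by-default gate. This is a minimal illustration, assuming a hypothetical in-memory credential store (`USERS`) and grant table (`GRANTS`); a real deployment would delegate both questions to an identity provider and a policy engine.

```python
import hashlib
import hmac
import os


def _hash(password: str, salt: bytes) -> bytes:
    # Slow, salted password hash (PBKDF2-HMAC-SHA256).
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


# Hypothetical credential store: username -> (salt, password hash).
_salt = os.urandom(16)
USERS = {"alice": (_salt, _hash("correct horse", _salt))}

# Hypothetical authorization table: (user, resource) pairs that are permitted.
GRANTS = {("alice", "payroll-app")}


def authenticate(user: str, password: str) -> bool:
    """Are they who they claim to be?"""
    record = USERS.get(user)
    if record is None:
        return False
    salt, expected = record
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(_hash(password, salt), expected)


def authorize(user: str, resource: str) -> bool:
    """Are they allowed to access the resource?"""
    return (user, resource) in GRANTS


def check_access(user: str, password: str, resource: str) -> bool:
    # Deny by default: both checks must pass.
    return authenticate(user, password) and authorize(user, resource)
```

Because `check_access` uses `and`, a request that fails authentication never reaches the authorization step, and a missing grant denies access even with a valid password.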

Simple concept, right? There’s a bit more to it.

Misconceptions

Despite its growing popularity, Zero Trust is often misunderstood. Here are some common misconceptions:

"Zero Trust means no one is ever trusted."

False. Zero Trust isn't about distrust—it’s about verifying continuously. Trust is established through authentication, authorization, and contextual analysis. It is not assumed based on network location or previous access.

"Zero Trust is only for big enterprises or government agencies."

Although larger organizations have more resources for implementing Zero Trust at scale, its principles can benefit businesses of all sizes. In fact, smaller organizations may be able to adopt the model more quickly due to fewer legacy systems and simpler infrastructures.

"Zero Trust replaces all existing security tools."

False. Zero Trust is not a rip-and-replace strategy. Instead, it builds on and enhances your existing security stack, helping you gain more value from current investments through improved integration and visibility.

"Zero Trust is a single product or solution."

Zero Trust isn’t a tool you can buy off the shelf—it’s a strategic framework. It requires a coordinated approach involving identity management, endpoint security, access control, monitoring, automation, and more. It's about aligning people, processes, and technologies around a unified goal: minimizing implicit trust and reducing risk.

Building Trust

For Zero Trust to work, you need appropriate checks for both authentication and authorization.

Authentication technologies range from traditional passwords to advanced approaches such as biometrics, passwordless authentication, and multi-factor authentication (MFA). These technologies improve security by verifying not only “something you know” but also “something you have” or “something you are.” Adaptive authentication techniques may also evaluate contextual factors such as device health, location, and behavior patterns to dynamically adjust authentication requirements.

These factors are checked at sign-on and continuously reverified throughout the user's session.

- Example: Assess the user’s location. An executive logging in with the right password but from an unexpected country requires greater scrutiny.
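Adaptive authentication can be sketched as a risk score computed from context and mapped to a decision. The signals, weights (50 and 30), and thresholds below are hypothetical values chosen for illustration, not recommended settings.

```python
from dataclasses import dataclass


@dataclass
class LoginContext:
    user: str
    password_ok: bool
    country: str
    device_managed: bool


# Hypothetical per-user baseline: countries each user normally signs in from.
USUAL_COUNTRIES = {"exec1": {"US"}}


def risk_score(ctx: LoginContext) -> int:
    """Add points for each contextual signal that deviates from the norm."""
    score = 0
    if ctx.country not in USUAL_COUNTRIES.get(ctx.user, set()):
        score += 50  # unexpected location
    if not ctx.device_managed:
        score += 30  # unknown or unmanaged device
    return score


def decide(ctx: LoginContext) -> str:
    if not ctx.password_ok:
        return "deny"
    score = risk_score(ctx)
    if score >= 80:
        return "deny"
    if score >= 50:
        return "step_up_mfa"  # right password, but ask for a second factor
    return "allow"
```

Because sign-on is not the only checkpoint, the same `decide` function could be re-run whenever the session context changes during the session.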

From here, define access policies that use those attributes to determine who can access what, from where, and how. Authorization may vary based on the attributes.

- Example: A normal user might be restricted to accessing the web interface of an internal server, whereas an IT admin may be able to log in to that server via Secure Shell (SSH) protocol.
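An attribute-based policy like this one can be sketched as a list of rules matched against each request. The rule format and the role names `user` and `it_admin` are hypothetical.

```python
# Hypothetical policy: each rule allows one (role, resource, protocol) combination.
POLICY = [
    {"role": "user", "resource": "internal-server", "protocol": "https"},
    {"role": "it_admin", "resource": "internal-server", "protocol": "https"},
    {"role": "it_admin", "resource": "internal-server", "protocol": "ssh"},
]


def is_allowed(role: str, resource: str, protocol: str) -> bool:
    # Deny by default; allow only if some rule matches every attribute.
    return any(
        rule["role"] == role
        and rule["resource"] == resource
        and rule["protocol"] == protocol
        for rule in POLICY
    )
```

Both roles reach the web interface, but only `it_admin` matches a rule for SSH, so a normal user's SSH attempt falls through to the default deny.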

Access policies don’t only apply to users.

All accesses to the protected resource, including any server-to-server traffic, should be covered by a policy.

Similarly, Internet of Things (IoT) devices—which often operate without human interaction—must be included in your Zero Trust framework. These devices should be identified and authenticated based on their unique characteristics (e.g., device ID, firmware version, behavior patterns) and have their access tightly governed by context-aware policies. This ensures only legitimate, authorized IoT devices can interact with critical systems, minimizing the risk posed by compromised or rogue endpoints.

Zero Trust solutions provide the flexibility to choose suitable attributes and policy definitions, blocking hackers from accessing protected resources while maintaining existing application communications and giving authorized users a streamlined experience.

Zero Trust and Identity

There are many open services on an application server.

If you “assume breach” and want to protect against hackers who may already be in your network, every single network packet must go through authentication and authorization.

- Example: IT admins need to be able to log in to manage the server. An attacker who steals an administrator credential can use that login path to reach resources through a side door, bypassing the application's front door entirely.

- Example: Even without credentials, attackers can gain access by sending a carefully crafted packet to a vulnerable service.

Identity is necessary, but insufficient, to achieve Zero Trust.

Zero Trust Data Security

A resource protected by Zero Trust is defended in several ways. For one, all unauthorized packets are blocked, effectively hiding the protected resource on the internal network. This resource cloaking has many benefits, including:

- Preventing hackers from discovering and probing the protected resource.

- Making it difficult to uncover corporate practices and technology stacks.

- Blocking access from unauthorized clients, including malware, hacker toolkits, and ransomware.

Zero Trust policies aren't limited to access to a resource. They can also work to control access from a protected resource—for example, to prevent software on protected resources from making unauthorized lateral access to other machines in the network or on the internet. This secures the data on the resource by:

- Guarding against privilege abuse by authorized users.

- Blocking data leaks.
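Outbound (egress) control can be sketched as an allowlist of destinations the protected resource may contact. The address ranges and ports are hypothetical examples: here the resource may reach only its database and a log collector, so an attempt to SSH to a neighbor or upload to an internet host is refused.

```python
from ipaddress import ip_address, ip_network

# Hypothetical outbound policy for one protected resource: the only
# destinations it may open connections to. Everything else is blocked.
ALLOWED_EGRESS = [
    (ip_network("10.20.0.0/24"), 5432),  # its own backend database
    (ip_network("10.30.1.5/32"), 514),   # central syslog collector
]


def egress_allowed(dst_ip: str, dst_port: int) -> bool:
    addr = ip_address(dst_ip)
    return any(addr in net and dst_port == port for net, port in ALLOWED_EGRESS)
```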

Zentera customers often restrict access to a resource to known-good virtual desktop infrastructure (VDI) clients, preventing VNC- or RDP-based attacks. They enforce copy/paste controls to block exfiltration through the clipboard, and then use Zero Trust policies to prevent the resource from sending data directly to the internet or to lateral staging servers.

Another way Zero Trust can secure resources is by ensuring all traffic to and from the resource travels in an encrypted tunnel. The AppLink capability of Zentera's CoIP® Platform can encrypt local area network (LAN) traffic to prevent packet sniffing and spoofing. Although LAN encryption isn’t explicitly required by Zero Trust standards such as NIST SP 800-207, US federal policy in OMB M-22-09 directs agencies to encrypt traffic inside their environments, including internal DNS and HTTP traffic, rather than relying on the network perimeter for protection.

In the event of a breach attempt or suspicious activity, Zero Trust enables rapid containment and response. Session-layer policy enforcement allows anomalous behavior—such as lateral movement or privilege escalation—to trigger automated actions or alerts. Zentera’s infrastructure can isolate affected resources without impacting the broader network, while audit trails and telemetry support forensics and ongoing policy refinement.

Control Categories

Zero Trust is a practical security model with a set of technical controls that enforce least-privilege access and continuous verification across your infrastructure. These controls work together to secure every interaction between users, devices, applications, and data.

Here’s how those controls break down across four key categories:

Network Controls

Network controls filter and inspect traffic to ensure only authorized connections are allowed.

- Microsegmentation: Limits lateral movement by restricting communication between workloads unless explicitly permitted.

- Software-defined perimeter (SDP): Hides applications from unauthorized users and enforces policy-based access through gateways or inline enforcement points.

- Encrypted tunnels (e.g., AppLink): Protect traffic between trusted endpoints, even on internal networks, from packet sniffing and spoofing.

- Policy-based routing: Routes traffic only through authorized paths based on policy, avoiding exposure to untrusted zones.

Identity Controls

Every access request is evaluated in real time based on user and device identity, not assumed trust.

- Multi-Factor Authentication (MFA): Requires additional verification factors before granting access to resources.

- Single Sign-On (SSO) with Identity Federation: Centralizes identity while enabling seamless, policy-driven access across systems.

- Role-Based Access Control (RBAC): Grants permissions based on job roles and responsibilities, limiting overprivileged access.

- Device Identity and Posture Checking: Evaluates device health, OS version, patch level, and other attributes before allowing access.
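A posture check can be sketched as a function that compares reported device attributes against minimum requirements. The thresholds in `MIN_OS_VERSION` and `MAX_PATCH_AGE_DAYS` are hypothetical, and in practice the attributes would come from an attested endpoint agent rather than from the device's own unverified claims.

```python
from dataclasses import dataclass


@dataclass
class DevicePosture:
    os_name: str
    os_version: tuple[int, ...]
    days_since_patch: int
    disk_encrypted: bool


# Hypothetical minimum requirements; real values depend on your own baseline.
MIN_OS_VERSION = {"windows": (10, 0, 19045), "macos": (14, 0)}
MAX_PATCH_AGE_DAYS = 30


def posture_ok(d: DevicePosture) -> tuple[bool, list[str]]:
    """Return (passed, reasons) so a denial can be explained to the user."""
    reasons = []
    minimum = MIN_OS_VERSION.get(d.os_name)
    if minimum is None:
        reasons.append(f"unsupported OS: {d.os_name}")
    elif d.os_version < minimum:  # tuples compare element by element
        reasons.append("OS version below minimum")
    if d.days_since_patch > MAX_PATCH_AGE_DAYS:
        reasons.append("patches out of date")
    if not d.disk_encrypted:
        reasons.append("disk not encrypted")
    return (not reasons, reasons)
```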

Endpoint Controls

Endpoints are where users interact with data and where attackers often gain a foothold. Zero Trust ensures these devices are controlled and monitored.

- Device authentication and attestation: Confirms the device identity and security posture before granting access.

- Copy/paste and file transfer restrictions: Prevent data exfiltration through user actions such as clipboard or file sharing.

- Client software integrity checks: Ensures client applications (such as VDI clients) are secure and not tampered with.

- Contextual access policies: Adjust access rights dynamically based on endpoint state and location.

Data Controls

Ultimately, Zero Trust aims to protect data—both in motion and at rest.

- Access control lists (ACLs) at the application layer: Prevent unauthorized access to sensitive data within applications.

- Data loss prevention (DLP) rules: Detect and block unauthorized sharing, copying, or transmission of sensitive data.

- Encryption at rest and in transit: Ensures data is unreadable to unauthorized users or systems.

- Activity logging and auditing: Captures all user and system interactions for compliance, detection, and incident response.

What Is ZTNA?

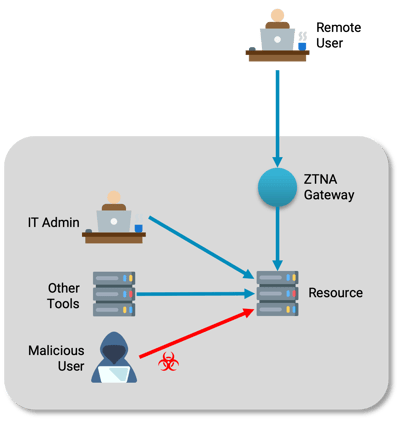

Zero Trust Network Access (ZTNA) does not grant network access.

ZTNA is most often used to provide users with access limited to specific resources.

Think of ZTNA as a replacement for a user virtual private network (VPN), but without granting access to the network. While VPNs create an encrypted tunnel to the entire corporate network, they inherently grant broad network access once connected, which conflicts with Zero Trust’s principle of least privilege. VPNs authenticate users but do not enforce granular access controls based on device posture or specific resource needs, increasing the risk of lateral movement if credentials or devices are compromised.

In contrast, ZTNA limits access strictly to authorized applications or services, continuously verifying user identity and device health, making it a more precise and secure fit for a Zero Trust architecture. It has much more granular filters based on the identity of the user, device, and client software. ZTNA solutions may deploy as a gateway in front of protected resources or as an agent installed on the protected resource, and Zentera offers both models.

Most ZTNA solutions only support remote access ("north-south") use cases, but this means the security controls are only effective when the user is remote. Because Zero Trust is about protecting assets even when the networks are compromised, it’s critical that the same levels of authentication be used inside the enterprise ("east-west"). Without east-west coverage, you can’t filter threats already inside the network and fulfill Zero Trust. Zentera's ZTNA solutions work across the compass, providing users with a consistent access method, both north-south and east-west, to ensure protection whether users are at home or in the office.
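The key property of east-west coverage is that the access decision ignores where the request comes from. A minimal sketch, assuming hypothetical entitlement and approved-client tables: `source` is recorded on the request but deliberately not consulted, and a successful decision grants one application, never a network.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    device_trusted: bool
    client: str   # client software making the request
    app: str      # the specific application requested
    source: str   # "home", "office", ... (deliberately NOT used below)


# Hypothetical per-application entitlements and approved client software.
ENTITLEMENTS = {"alice": {"build-server"}}
APPROVED_CLIENTS = {"corp-browser", "corp-ssh"}


def ztna_decide(req: Request) -> bool:
    # The decision depends on the user, device, client, and application.
    # The source network is ignored, so a request from the office LAN
    # (east-west) gets the same scrutiny as one from home (north-south).
    return (
        req.device_trusted
        and req.client in APPROVED_CLIENTS
        and req.app in ENTITLEMENTS.get(req.user, set())
    )
```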

Securing a Remote Workforce Beyond Basic ZTNA

Basic ZTNA is a strong start for secure remote access, but today’s distributed workforces require more than just secure connections. Securing a remote workforce means ensuring consistent protection across users, devices, and locations—not just for initial access, but throughout the session. Zentera goes beyond basic ZTNA by layering in microsegmentation, application cloaking, and real-time policy enforcement to block lateral movement and prevent data leakage.

For example, a contractor accessing a development environment from home shouldn’t have the same privileges or visibility as a full-time engineer in the office. Zentera’s advanced controls make this possible, dynamically adjusting access based on role, device posture, and contextual risk. And with strong east-west enforcement, even internal threats—such as compromised endpoints—are contained before they can move laterally or impact other critical systems.

In short, ZTNA is a key piece of Zero Trust, but real security for the hybrid workforce requires enforcing Zero Trust principles across the entire environment, no matter where users or workloads reside.

New Perimeter

One of the easiest mistakes to make when architecting Zero Trust is to focus only on "front-door" access. A policy that attackers can route around is a policy you can't enforce, and policies you can't enforce are worthless.

In addition to the web "front door," there may be many other open paths:

- SSH and PowerShell for IT administrators

- Application performance monitoring

- Logging tools

If threats in the network can bypass the gateway by going straight to the application server or the databases it depends on, the gateway isn’t very useful. To protect the resource, you need a new perimeter around the resource and its dependencies, so all access to the resource goes through policy checks. With that perimeter in place, you can safely say you’ve achieved the goals of Zero Trust.

To guide this complex process, many organizations use Zero Trust maturity models, frameworks that help measure how thoroughly Zero Trust principles are embedded across people, processes, and technology. These models assess multiple dimensions, such as identity and access management, network segmentation, device security, and continuous monitoring. By benchmarking your organization’s maturity level, you can prioritize areas for improvement and track progress toward a fully enforced Zero Trust architecture.

ZTNA secures remote access, but resources that are open in the network are still exposed to threats in the network.

Architecture

The combination of access policy control with ZTNA and a new perimeter follows the guidance in NIST SP 800-207 to the letter.

This new perimeter is an "implicit trust zone." Servers and devices within the zone are trusted and able to bypass policy checks to access each other. Every access that crosses into or out of the implicit trust zone has the policy enforced at a policy enforcement point (PEP).

The new perimeter must force all accesses to go through the PEP. Think of this as an application perimeter, containing an application (e.g., web servers) and its dependencies (e.g., backend databases).
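The implicit trust zone and PEP can be modeled in a few lines. This is a sketch under stated assumptions: the zone members and boundary rules are hypothetical, and a real PEP would consult a policy decision point rather than a hard-coded set.

```python
# Hypothetical application perimeter: the app and its dependencies form the
# implicit trust zone. Every flow that crosses the boundary hits the PEP.
TRUST_ZONE = {"web-1", "web-2", "db-1"}

# Rules the PEP applies to boundary-crossing flows: (source, destination, port).
BOUNDARY_POLICY = {("alice-laptop", "web-1", 443), ("apm-collector", "web-1", 9100)}


def pep_permits(src: str, dst: str, port: int) -> bool:
    if src in TRUST_ZONE and dst in TRUST_ZONE:
        return True  # implicit trust: traffic inside the zone bypasses the PEP
    # Any flow entering or leaving the zone must match an explicit rule.
    return (src, dst, port) in BOUNDARY_POLICY
```

A user can reach the web tier through the front door, but cannot go around it to the database, and the database cannot open unchecked connections out of the zone.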

The NIST Zero Trust Architecture spec provides a helpful lens through which to evaluate what is and what is not Zero Trust. Viewed through that lens, it’s easy to see that the core components of Secure Access Service Edge (SASE), such as the secure web gateway (SWG), are actually solving other problems, such as protecting users against malicious web content.

Industry groups like NIST, CSA, OWASP, and TCG are developing standards and best practices to help organizations adopt Zero Trust more consistently and effectively across cloud, application, and hardware environments. These efforts promote alignment and maturity industry-wide.

For more on NIST SP 800-207, check out our NIST SP 800-207 explainer article.

Zero Trust protects resources or assets; everything behind the PEP is implicitly trusted.

Perimeter Setup

You can create an application perimeter using legacy technology: put the protected resource and its dependencies in a separate VLAN, or change the network topology to put the resource and its dependencies behind a firewall. But as NIST SP 800-207 points out, the management overhead and disruption to existing applications make these options less than ideal.

Microsegmentation and software-defined perimeter can also help you create an application perimeter.

During implementation, many organizations run Zero Trust controls alongside existing legacy security models to minimize disruption and reduce risk. This parallel approach allows for gradual segmentation of applications and resources, careful testing of policies, and adjustment based on real-world use and feedback. By layering Zero Trust principles on top of traditional perimeters initially, you can maintain business continuity while steadily reducing reliance on less dynamic security controls. Establishing clear visibility, monitoring, and incremental enforcement will help ensure a smooth transition and build confidence in the new model.
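Running controls in parallel with legacy security often means evaluating policies in a monitor-only mode before switching to enforcement. A minimal sketch, assuming a hypothetical two-mode rollout switch; the policy is the same in both modes, and only the action taken on a denial changes.

```python
import logging
from enum import Enum

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("zt-rollout")


class Mode(Enum):
    MONITOR = "monitor"  # evaluate and log, but never block
    ENFORCE = "enforce"  # block anything the policy denies


def apply_policy(allowed_by_policy: bool, flow: str, mode: Mode) -> bool:
    """Return True if the flow should be let through in this rollout mode."""
    if allowed_by_policy:
        return True
    if mode is Mode.MONITOR:
        # Record what would have been blocked so the policy can be tuned
        # before enforcement is switched on.
        log.warning("would block: %s", flow)
        return True
    log.warning("blocked: %s", flow)
    return False
```

Reviewing the "would block" log lines during the monitor phase is what builds the confidence needed to flip the switch to `Mode.ENFORCE`.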

Microsegmentation

Microsegmentation minimizes the attack surface in a data center by filtering unused ports between pairs of machines, leaving only "authorized" communications.

Most microsegmentation solutions focus on data-center-scale challenges—deploy everywhere, make traffic between servers visible, and then filter unused ports to prevent “surprises.” The filtering can be implemented by:

- Programming the network (switch/router ACLs)

- Host firewalls (host-based microsegmentation)

Programming the network depends on the data center’s topology, whereas the host-based model applies to both north-south and east-west traffic and is more flexible.
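The visibility-then-filtering workflow can be sketched as turning observed flows into an allowlist and then dropping everything else. The flow records are hypothetical, and observed traffic should be reviewed before it becomes policy, since it may already include an attacker's connections.

```python
from collections import Counter

# Flows observed during the visibility phase: (src, dst, dst_port).
observed = [
    ("app-1", "db-1", 5432),
    ("app-1", "db-1", 5432),
    ("app-2", "db-1", 5432),
    ("app-1", "cache-1", 6379),
]


def build_allowlist(flows, min_count=1):
    """Turn observed traffic into explicit allow rules (one per src/dst/port)."""
    counts = Counter(flows)
    return {flow for flow, n in counts.items() if n >= min_count}


def filter_flow(allowlist, src, dst, port) -> bool:
    # Host-firewall-style decision: anything not explicitly allowed is dropped,
    # which closes every unused port between each pair of machines.
    return (src, dst, port) in allowlist
```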

Microsegmentation tools can be used to create a Zero Trust perimeter, but are not optimized for creating a network zone. In practice, many customers stop at the visibility stage and never turn on filtering. Also, because microsegmentation is targeted at the data-center scale, vendors may be challenged to scale down to individual resources or applications, both from operational and business perspectives. As a result, the difficulty of creating and maintaining a perimeter can vary widely from vendor to vendor.

Application Perimeter

An application perimeter is defined in software, and can be reconfigured in software, to control which services and users can access an application. Compared to microsegmentation deployed across the data center, an application perimeter can be deployed to protect a single application server.

Zentera's CoIP Platform implements this concept using a Virtual Chamber, a software-defined perimeter that enables administrators to create perimeters around groups of servers, partition them into smaller groups, or merge them as needed. This makes it possible to build an application perimeter around a resource and its dependencies without disrupting the application.
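The partition-and-merge idea can be illustrated with a small data model. This is a hypothetical model for illustration only, not Zentera's API: a perimeter is a named set of servers, and changing membership changes which servers share an implicit trust zone without moving any server on the network.

```python
from dataclasses import dataclass, field


@dataclass
class Perimeter:
    """Hypothetical model of a software-defined perimeter around a server group."""
    name: str
    members: set[str] = field(default_factory=set)

    def partition(self, subset: set[str], new_name: str) -> "Perimeter":
        """Split some members into their own, smaller perimeter."""
        if not subset <= self.members:
            raise ValueError("subset must already be inside this perimeter")
        self.members -= subset
        return Perimeter(new_name, set(subset))

    def merge(self, other: "Perimeter") -> None:
        """Absorb another perimeter's members; the servers themselves do not move."""
        self.members |= other.members
        other.members.clear()
```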

Perimeter Strategy

Even decades-old infrastructure supports the VLAN method of limiting the NIST SP 800-207 implicit trust zone, whereas microsegmentation and software-defined perimeter approaches can run on any IP network. But not all approaches are equal, and you must consider the operational costs of VLAN, microsegmentation, and application perimeter.

VLAN

Enterprises have mixed technology stacks, complete with different vendors and operational teams responsible for each site. Coordinating VLAN deployment and maintenance across the entire enterprise infrastructure can be challenging. Furthermore, cloud infrastructure introduces new concepts, such as virtual private clouds (VPCs) and virtual networks (VNETs). Building an application perimeter using existing infrastructure is possible, but it can end up costing far more in planning, execution, and maintenance.

Microsegmentation

Depending on the implementation, microsegmentation can also suffer from dependencies on the underlying infrastructure. Some vendors use ACLs to program rules into the infrastructure, and these tools may introduce dependencies on the network infrastructure stack or the API versions used in the environment.

Application Perimeter

Application perimeters are immune to infrastructure dependencies, providing a consistent configuration and deployment model across the entire estate.

Adopting Zero Trust doesn’t mean replacing all legacy systems immediately. Many organizations integrate Zero Trust principles alongside existing security controls to minimize disruption. Overlay solutions like Zentera’s CoIP Platform enable creating application perimeters without changing the underlying network or endpoints, enforcing identity-based policies in parallel with legacy firewalls and VLANs. For systems where agents can’t be installed—such as OT or legacy servers—Zero Trust DMZ appliances can enforce access at the network edge. This incremental approach leverages existing segmentation as a foundation while gradually enhancing security with Zero Trust controls, making the transition smoother and more manageable.

Zentera’s CoIP Platform creates application perimeters in two ways:

- The zLink software agent, which supports virtualized and bare-metal servers anywhere.

- The Zero Trust Gatekeeper (ZTG), which deploys in line with protected resources to create an application perimeter called a Zero Trust demilitarized zone (DMZ).

Vendor Selection Criteria for Zero Trust Technology

When evaluating Zero Trust technology providers, organizations should prioritize solutions that offer operational simplicity, infrastructure-agnostic deployment, and seamless integration with existing security tools. Key considerations include:

- Scalability and flexibility: Can the solution scale across diverse environments (on-premises, cloud, hybrid) without requiring extensive reconfiguration or hardware changes?

- Infrastructure independence: Does the solution operate without heavy dependencies on specific network hardware, API versions, or vendor lock-in?

- Ease of management: How intuitive is the platform’s policy creation, deployment, and ongoing management for distributed teams?

- Incremental adoption: Does the vendor support phased implementation, allowing coexistence with legacy security controls to minimize disruption?

- Agent support and compatibility: Are there lightweight agents for various endpoints, including virtualized and bare-metal servers, and alternatives for environments where agents can’t be installed?

- Strong identity and access controls: Does the solution enforce strict identity-based policies aligned with NIST Zero Trust principles?

Common Challenges

Here are some of the frequent challenges organizations face during Zero Trust adoption, and considerations to keep in mind:

- Complex legacy infrastructure: Difficulty integrating Zero Trust with existing network architectures and vendor ecosystems.

- Operational overhead: Managing new policies, microsegmentation rules, and enforcement points can increase administrative complexity.

- Visibility gaps: Lack of comprehensive network and application visibility can make defining and enforcing policies tricky.

- User experience concerns: Balancing strict security with seamless user access often requires fine-tuning and continuous monitoring.

- East-West traffic enforcement: Many solutions focus on north-south traffic, leaving internal east-west traffic unprotected.

- Cloud and hybrid complexity: Managing consistent Zero Trust policies across on-premises, cloud, and hybrid environments.

- Cultural and organizational resistance: Shifting mindset from perimeter-based trust to continuous verification demands stakeholder buy-in.

Cloud Use

As cloud computing continues to grow in importance, it’s critical to leverage the Zero Trust paradigm. Cloud flexibility and the need for end users and DevOps to access cloud applications from anywhere make it difficult to define a traditional perimeter. As many cloud infrastructures connect back to on-premises environments in a hybrid deployment, misconfigurations can open paths for attacks to flow back into the enterprise.

In truth, cloud infrastructure is not that different from legacy network infrastructure. Cloud providers offer VPN services, but a user's VPN client can typically connect to only one VPN at a time, forcing administrators to make difficult routing choices.

VPCs are fast to deploy and easy to configure, but difficult to reconfigure. Partitioning a VPC usually requires server migration. An application perimeter can be continually modified to improve security, whereas a VPC is static.

An overlay Zero Trust architecture, such as the CoIP Platform, can help solve these challenges. The AppLink capability of the CoIP Access Platform creates an Application Network that enables any-to-any, cross-domain ZTNA. It lets a single set of identity-based policies define connectivity, so the policies don't need to change when a workload migrates from on-prem to the cloud.
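Why identity-based policies survive migration can be sketched by writing the rule in terms of workload identities rather than addresses. The `Workload` type and identities are hypothetical, and the sketch assumes identity is established by authentication (for example, an agent's certificate), not inferred from the IP address.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    identity: str  # established by authentication, not derived from the IP
    address: str   # where it currently runs; changes when it migrates
    location: str  # "on-prem" or a cloud VPC/VNET


# Policy written only in terms of identities.
POLICY = {("billing-api", "ledger-db", 5432)}


def allowed(src: Workload, dst: Workload, port: int) -> bool:
    # Addresses and locations are never consulted, so moving a workload
    # does not require rewriting the rule.
    return (src.identity, dst.identity, port) in POLICY
```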

Many organizations operate in hybrid environments, combining on-premises infrastructure with multiple cloud providers. Successful Zero Trust implementation in such setups requires strategies that ensure consistent policy enforcement and visibility across all environments. This includes deploying unified identity and access management solutions that span both cloud and on-prem resources, using automation to reduce configuration errors, and leveraging overlay technologies like Zentera’s CoIP Platform to create seamless, secure application perimeters regardless of workload location.

Hybrid models benefit from phased rollouts, prioritizing critical applications and integrating legacy controls to maintain operational continuity while migrating to Zero Trust architectures.

DevOps Challenges

Modern development environments present unique security challenges that differ from traditional IT or production systems. High rates of change, frequent automation, dynamic infrastructure (such as containers and ephemeral environments), and broad access to sensitive systems and data all complicate the enforcement of Zero Trust principles.

Zero Trust in DevOps must account for:

- Automated processes and service accounts: Many build and deployment tools require privileged access to source code, environments, or secrets. Without tight policy control, compromised service accounts can offer attackers a fast path to lateral movement or data exfiltration.

- Ephemeral resources: Resources such as containers or test environments may only exist for minutes. Policies must apply dynamically to support secure access without manual reconfiguration.

- CI/CD pipelines: These pipelines often have open integrations with third-party tools and environments, which can introduce security gaps. Zero Trust should be enforced end-to-end, not just at user access points.

- Developer experience: Security controls should not disrupt rapid iteration. A Zero Trust approach that enables secure-by-default environments—with minimal friction for developers—is key to adoption.
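For automated processes and service accounts, one common pattern is short-lived, narrowly scoped credentials that are checked on every use. A minimal sketch using Python's standard `hmac` module; the token format is a simplified, hypothetical one, and production systems would normally use an established format such as signed JWTs via a vetted library, with the key held in a secrets manager.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SIGNING_KEY = b"demo-key-load-from-a-secrets-manager"  # hypothetical; never hard-code


def _sign(body: str) -> str:
    return hmac.new(SIGNING_KEY, body.encode(), hashlib.sha256).hexdigest()


def issue_token(service: str, scope: list, ttl_s: int = 300,
                now: Optional[float] = None) -> str:
    """Mint a short-lived token limited to the listed actions."""
    now = time.time() if now is None else now
    claims = {"sub": service, "scope": scope, "exp": now + ttl_s}
    body = base64.urlsafe_b64encode(json.dumps(claims).encode()).decode()
    return f"{body}.{_sign(body)}"


def verify(token: str, action: str, now: Optional[float] = None) -> bool:
    """Check signature, expiry, and scope on every use, not just once."""
    now = time.time() if now is None else now
    try:
        body, sig = token.rsplit(".", 1)
    except ValueError:
        return False
    if not hmac.compare_digest(sig, _sign(body)):
        return False
    claims = json.loads(base64.urlsafe_b64decode(body.encode()))
    return now < claims["exp"] and action in claims["scope"]
```

A compromised pipeline token is then useful to an attacker only for the listed actions and only until it expires.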

Emerging Technologies

As organizations continue to adopt Zero Trust principles, emerging technologies like artificial intelligence (AI), blockchain, and advanced analytics are playing an increasingly critical role in strengthening security frameworks.

Artificial Intelligence and Machine Learning

AI and machine learning enhance Zero Trust by enabling dynamic, real-time analysis of user behavior and network activity. These technologies help identify anomalies that might indicate insider threats or compromised credentials, allowing automated responses such as adaptive authentication or immediate access revocation. This intelligent threat detection reduces reliance on static rules and enhances the ability to enforce Zero Trust policies effectively across complex cloud environments.
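As a rough illustration of behavior-based decisions, the Python sketch below compares one observation (say, megabytes a user downloaded today) against that user's own history and maps the result to allow, step-up authentication, or revocation. It swaps in a simple z-score where a real deployment would use a trained model, and the function name `risk_action` and both thresholds are hypothetical.

```python
import statistics
from typing import List


def risk_action(history: List[float], observed: float,
                step_up_z: float = 3.0, revoke_z: float = 6.0) -> str:
    """Map how far an observation sits above the user's baseline to an access action.

    One-sided on purpose: only unusually HIGH activity raises the risk level.
    Thresholds are hypothetical defaults, not recommended values.
    """
    if len(history) < 2:
        return "step_up"  # too little baseline to judge; ask for stronger verification
    mean = statistics.mean(history)
    spread = statistics.stdev(history) or 1e-9  # avoid dividing by zero on a flat history
    z = (observed - mean) / spread
    if z >= revoke_z:
        return "revoke"   # far outside normal behavior: cut the session
    if z >= step_up_z:
        return "step_up"  # unusual: require re-authentication (adaptive auth)
    return "allow"
```

With a history of `[100, 110, 95, 105, 90]`, an observation of `130` sits roughly 3.8 standard deviations above the mean, so it triggers step-up authentication rather than an outright block.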

Blockchain Technology

Blockchain introduces a decentralized, tamper-resistant method for identity verification and transaction logging. By leveraging blockchain, Zero Trust architectures can benefit from immutable audit trails and enhanced trustworthiness of identity data. This can improve device authentication and authorization processes by ensuring that credentials and access permissions cannot be altered without detection, reinforcing the “never trust, always verify” principle.
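The tamper-evidence property described above comes from hash chaining, which can be sketched without a full blockchain. In the hypothetical Python example below, each audit entry stores the SHA-256 hash of the previous entry, so editing or removing an earlier entry breaks verification. This is a single-party hash chain, not a distributed ledger: it cannot stop someone with write access from rebuilding the whole chain, or from dropping the newest entries, unless the latest hash is anchored somewhere they cannot rewrite. Replication across independent parties is what a blockchain adds to close that gap.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: Dict[str, Any]) -> str:
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append(chain: List[Dict[str, Any]], event: Dict[str, Any]) -> None:
    """Append an access event, linking it to the hash of the previous entry."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev": prev, "hash": _digest(prev, event)})


def verify(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every link; an edited, reordered, or removed earlier entry fails."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True
```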

Cloud-Native Security Tools and Automation

Emerging cloud-native tools provide automated policy enforcement, continuous monitoring, and integration with DevOps pipelines. Automation reduces manual overhead and human error, enabling Zero Trust models to scale efficiently within dynamic cloud environments where workloads and users are constantly changing.
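Automated policy checks are one way to reduce the human error mentioned above. The following Python sketch shows a pre-deployment lint step that flags rules with unrestricted sources, destinations, or ports before they reach production. The rule schema (`name`, `source`, `destination`, `ports`) and the `lint_policies` function are hypothetical, not the format of any particular product.

```python
from typing import Any, Dict, List

# Values treated as "anything goes" in this hypothetical rule schema.
WILDCARDS = {"*", "any", "0.0.0.0/0"}


def lint_policies(rules: List[Dict[str, Any]]) -> List[str]:
    """Return one finding per rule property that would undermine least privilege."""
    findings = []
    for rule in rules:
        name = rule.get("name", "<unnamed>")
        if rule.get("source") in WILDCARDS:
            findings.append(f"{name}: source is unrestricted")
        if rule.get("destination") in WILDCARDS:
            findings.append(f"{name}: destination is unrestricted")
        ports = rule.get("ports") or []
        if not ports or any(p in WILDCARDS for p in ports):
            findings.append(f"{name}: ports are unrestricted or unspecified")
    return findings
```

In a CI/CD pipeline, a job could run this against the proposed policy set and fail the build whenever the returned list is non-empty, so permissive rules are caught by the pipeline rather than by a reviewer's memory.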

Other Innovations

Technologies such as Secure Access Service Edge (SASE) and Confidential Computing further bolster Zero Trust by combining network security functions with cloud-native architectures and protecting data in use, respectively. These advances help create more resilient, flexible, and privacy-focused security infrastructures.

By integrating these emerging technologies, organizations can address Zero Trust’s core challenges, enhancing visibility, control, and trust verification across increasingly complex and distributed environments.

OT Security

Although the underlying Zero Trust principles are the same for operational technology (OT) and critical infrastructure as for cloud and data centers, the implementation can differ significantly. Many OT workloads cannot accept the software agent needed to create an application perimeter. For those workloads, you need to define a Zero Trust DMZ to enforce access policies instead.

Manufacturing and critical infrastructure environments are highly sensitive to downtime, so it's important to be able to insert the Zero Trust DMZ while minimizing the impact on existing applications. Zentera helps customers implement a Zero Trust DMZ with the Zero Trust Gatekeeper—an appliance that deploys inline to protect resources and enforce Zero Trust access policies.
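Conceptually, a Zero Trust DMZ acts as a default-deny gate in front of devices that cannot protect themselves. The Python sketch below models that decision for a single connection request; it is a hypothetical illustration, not how the Zero Trust Gatekeeper is implemented. The `Request` fields, the `ALLOWED` allowlist, and the user and device names are assumptions. Port 502 is the standard Modbus/TCP port and 5900 the default VNC port.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class Request:
    user: str
    mfa_verified: bool
    target: str  # an OT asset behind the DMZ, e.g. a PLC or HMI
    port: int


# Hypothetical allowlist of (user, target, port) flows an operator has approved.
ALLOWED: FrozenSet[Tuple[str, str, int]] = frozenset({
    ("plant-engineer", "plc-line-3", 502),       # Modbus/TCP
    ("maintenance-vendor", "hmi-line-3", 5900),  # VNC
})


def gatekeeper_decision(req: Request) -> bool:
    """Default deny: forward traffic only for verified users on explicitly allowed flows."""
    if not req.mfa_verified:
        return False
    return (req.user, req.target, req.port) in ALLOWED
```

Anything not explicitly listed, including a verified user reaching for a different PLC, is denied, which limits how far a compromised account can move inside the plant network.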

Government Interest

Governments worldwide have embraced Zero Trust.

In the US, the Biden administration’s Executive Order 14028 (May 2021) directed federal agencies to advance toward a Zero Trust Architecture, building on the model defined in NIST SP 800-207, and was followed by OMB memorandum M-22-09, which requires agencies to meet specific Zero Trust security goals by the end of fiscal year 2024. The order also lays the groundwork for the government to push Zero Trust requirements into private industry, starting with its contractors.

The US government is also using the power of the purse to spur change. Under the Secure Software Development Attestation Form from the Cybersecurity and Infrastructure Security Agency (CISA), producers of software that the US government procures must attest that they follow secure development practices drawn from NIST SP 800-218, the Secure Software Development Framework (SSDF). Zero Trust can help companies implement these practices, including segmenting build environments and controlling access to source code.

SASE Role

Secure Access Service Edge (SASE) proposes a new, cloud-based deployment model for security services, covering both services previously deployed as on-premises components and services that are new to the cloud. It bundles a set of capabilities such as Secure Web Gateway (SWG), Cloud Access Security Broker (CASB), and SD-WAN.

SASE offerings often include ZTNA as well. SASE-delivered ZTNA may play a role in remote access for a Zero Trust project, but it cannot deliver on-premises ZTNA, so on-premises and remote users will have different experiences. It also lacks the concept of an application perimeter, which a NIST SP 800-207 Zero Trust Architecture would need.

For more information on SASE, check out our explainer article.

Tech Stack

Zero Trust isn’t something you can just declare. You need the right tech to back it up. That includes everything from identity and access tools to monitoring, automation, and threat detection. Here’s a breakdown of technology requirements by function:

1. Identity and Access Management (IAM)

- Identity Provider (IdP), e.g., Microsoft Entra ID (formerly Azure AD), Okta, Ping Identity

- Multi-Factor Authentication (MFA)

- Single Sign-On (SSO)

- Privileged Access Management (PAM)

2. Device Security and Posture

- Endpoint Detection and Response (EDR)

- Mobile Device Management (MDM)

- Device Compliance/Health Verification

- Host-Based Firewalls

3. Network and Application Access

- Software-Defined Perimeter (SDP)

- Secure Web Gateways (SWG)

- Zero Trust Network Access (ZTNA)

- DNS Filtering

- Virtual Private Network (VPN) Alternatives

4. Segmentation and Enforcement

- Microsegmentation Tools

- Network Access Control (NAC)

- Firewall as a Service (FWaaS)

- Policy Enforcement Points (PEPs)

5. Visibility and Monitoring

- Security Information and Event Management (SIEM)

- Network Traffic Analysis (NTA)

- User and Entity Behavior Analytics (UEBA)

- Centralized Logging and Dashboards

6. Threat Detection and Response

- Intrusion Detection/Prevention Systems (IDS/IPS)

- Threat Intelligence Platforms

- Security Orchestration, Automation, and Response (SOAR)

- Incident Response Tools

7. Automation and Orchestration

- Policy Management Automation

- Infrastructure as Code (IaC)

- Workflow Automation Tools

- Integration APIs and Connectors
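These categories come together at the point where an access decision is made. NIST SP 800-207 separates that decision (the policy engine) from its enforcement (the policy enforcement point). The Python sketch below is a hypothetical, much-simplified decision function that combines identity signals (entitlement, MFA) with device-posture signals (managed device, healthy EDR agent); the class names, fields, and rules are assumptions for illustration only.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple


@dataclass
class Identity:  # signals an IdP / MFA stack might supply
    user: str
    mfa: bool
    groups: Set[str] = field(default_factory=set)


@dataclass
class Device:  # signals MDM / EDR posture checks might supply
    managed: bool
    edr_healthy: bool


@dataclass
class Resource:
    name: str
    allowed_groups: Set[str]
    sensitive: bool


def decide(who: Identity, device: Device, res: Resource) -> Tuple[bool, str]:
    """Hypothetical per-request policy decision; a PEP would enforce the result."""
    if not who.groups & res.allowed_groups:
        return False, "not entitled"
    if not who.mfa:
        return False, "MFA required"
    if res.sensitive and not (device.managed and device.edr_healthy):
        return False, "device posture insufficient for sensitive resource"
    return True, "allow"
```

Because the function is evaluated per request, a device whose EDR agent stops reporting loses access to sensitive resources on its next request rather than at its next network login.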

Implementation

Before beginning implementation, it’s important to assess your current environment’s readiness for Zero Trust. A structured framework—like the one outlined in NIST SP 800-207—can help you evaluate critical areas such as identity management, device posture, application architecture, and existing network segmentation. By understanding where you stand, you can better prioritize initiatives and identify any gaps that must be addressed early.

Practical resource allocation and project planning are key to a smooth Zero Trust journey. Start by assessing your available team capacity, budget, and tools to prioritize initiatives that deliver the most immediate value. Break down the overall transformation into manageable projects with clear milestones and realistic timelines, allowing for adjustments as you learn and grow. Ensure you allocate time not only for technical deployment but also for training, policy refinement, and ongoing management. This structured approach helps balance progress with your organization’s operational needs and keeps momentum steady without overwhelming your resources.

A phased implementation roadmap can make the transformation more manageable. Start by identifying high-value applications or vulnerable resources to protect first. Then, roll out Zero Trust controls in phases—such as enforcing access policies for remote users, followed by segmenting east-west traffic, and finally extending protections to hybrid or OT environments. Each milestone should be followed by a review to optimize controls and plan the next phase.

Transitioning to Zero Trust one resource and use case at a time tends to yield the best results, since each step stays small enough to test and review before the next one begins.
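The first step of that roadmap, deciding what to protect first, can be made repeatable with a simple scoring rule. The Python sketch below ranks assets by business value multiplied by exposure, each scored from 1 to 5, and splits them into rollout phases of a fixed size. The scoring scheme and the `plan_phases` function are hypothetical; real prioritization would also weigh dependencies and team capacity.

```python
from typing import Dict, List, Tuple


def plan_phases(assets: Dict[str, Tuple[int, int]], per_phase: int) -> List[List[str]]:
    """Rank assets by value * exposure and group them into rollout phases.

    `assets` maps an asset name to (business_value, exposure), each a hypothetical 1-5 score.
    """
    for name, (value, exposure) in assets.items():
        if not (1 <= value <= 5 and 1 <= exposure <= 5):
            raise ValueError(f"{name}: scores must be between 1 and 5")
    if per_phase < 1:
        raise ValueError("per_phase must be at least 1")
    # Highest score first; ties broken alphabetically so the plan is deterministic.
    ranked = sorted(assets, key=lambda n: (-assets[n][0] * assets[n][1], n))
    return [ranked[i:i + per_phase] for i in range(0, len(ranked), per_phase)]
```

With scores of build-server (4, 5), payroll (5, 4), crm (4, 3), plc-gateway (5, 2), and wiki (2, 2) and two assets per phase, the plan starts with build-server and payroll, continues with crm and plc-gateway, and leaves wiki for last.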

Zero Trust Implementation Checklist:

Preparation and Assessment

- Inventory all assets, including VMs, bare-metal servers, cloud workloads, and OT devices.

- Map the current network architecture, including segmentation and existing controls.

- Evaluate identity and access management capabilities and gaps.

- Assess device posture management. Are all devices monitored and compliant?

- Review the application landscape. Which apps require Zero Trust controls first?

- Identify regulatory or compliance requirements (e.g., federal encryption standards).

- Gauge team capacity, budget, and existing tools to support transformation.
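Part of the inventory work above can be automated by cross-checking the asset list against what your monitoring or posture tooling actually reports on. The Python sketch below assumes a hypothetical inventory format (a list of dicts with `id` and `owner`) and a set of asset IDs seen by monitoring; both stand in for whatever your CMDB and EDR or NAC exports provide, and `coverage_gaps` is an illustrative name.

```python
from typing import Dict, Iterable, List, Set


def coverage_gaps(inventory: Iterable[Dict[str, str]], monitored: Set[str]) -> Dict[str, List[str]]:
    """List assets that have no accountable owner or are not seen by monitoring."""
    gaps: Dict[str, List[str]] = {"no_owner": [], "unmonitored": []}
    for asset in inventory:
        if not asset.get("owner"):
            gaps["no_owner"].append(asset["id"])
        if asset["id"] not in monitored:
            gaps["unmonitored"].append(asset["id"])
    return gaps
```

OT devices that cannot run agents will tend to land in the `unmonitored` list, flagging them early as candidates for network-based controls such as the Zero Trust DMZ described in the OT section.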

Planning and Prioritization

- Define clear goals and success metrics for each implementation phase.

- Prioritize high-value or high-risk resources for early Zero Trust enforcement.

- Develop a phased rollout plan with milestones and timelines.

- Allocate resources for training, policy development, and ongoing management.

- Decide on a single integrated platform versus best-of-breed solutions.

Implementation and Deployment

- Enforce strict identity verification and least-privilege access policies.

- Segment networks to minimize lateral movement and isolate sensitive zones.

- Implement continuous monitoring and real-time policy enforcement at the session layer.

- Enable encryption for all traffic, including LAN segments if applicable.

- Set up audit logging and telemetry for forensic analysis and policy refinement.

- Test policies in controlled environments before wide deployment.
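Testing policies before enforcement can start with a dry run: replay observed traffic against the proposed default-deny rules and review what would have been blocked. The Python sketch below does this for flows described as (source zone, destination zone, port) tuples; the flow format, zone names, and the `dry_run` function are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

Flow = Tuple[str, str, int]  # (source zone, destination zone, destination port)


def dry_run(policy: Set[Flow], observed: Iterable[Flow]) -> List[Tuple[Flow, int]]:
    """Replay observed flows against a default-deny allowlist without enforcing it.

    Returns each flow that would be blocked with its hit count, most frequent first.
    """
    blocked = Counter(flow for flow in observed if flow not in policy)
    return sorted(blocked.items(), key=lambda item: (-item[1], item[0]))
```

Frequently hit flows at the top of the report are either legitimate dependencies missing from the policy or lateral movement worth investigating, and both should be resolved before switching to enforcement.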

Ongoing Optimization

- Review each phase’s results to identify successes and areas for improvement.

- Use audit trails to investigate anomalies and update policies accordingly.

- Train teams regularly on evolving Zero Trust concepts and tools.

- Adjust resource allocation based on feedback and changing priorities.

- Stay current with Zero Trust standards and best practices (e.g., NIST updates).
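Audit trails also support ongoing least-privilege cleanup. The Python sketch below compares what each identity has been granted with what the audit log shows it actually used during a review window, and reports unused grants as candidates for removal. The data shapes and the `unused_grants` function are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple


def unused_grants(grants: Dict[str, Set[str]],
                  audit_events: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Return, per identity, granted resources that never appear in the audit events.

    `grants` maps identity -> granted resources; `audit_events` yields (identity, resource).
    """
    used: Dict[str, Set[str]] = {}
    for identity, resource in audit_events:
        used.setdefault(identity, set()).add(resource)
    report: Dict[str, Set[str]] = {}
    for identity, resources in grants.items():
        stale = resources - used.get(identity, set())
        if stale:
            report[identity] = stale
    return report
```

Unused access held by a service account, such as a backup job that never touches one of its databases, is a natural first candidate to revoke, since it widens the blast radius without serving any workflow.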
