Zero Trust Security for Hybrid Kafka with CoIP Platform

Apache Kafka is a popular open-source distributed streaming platform for publishing, subscribing to, storing, and processing streams of records. It is widely used to build real-time streaming data pipelines and applications.

Kafka performs well and offers authenticated, encrypted transport via TLS and SASL. However, its deployment model requires direct connections between the Kafka brokers and Zookeeper nodes. In a hybrid environment, one option is to set up a VPN, which can require the support of the networking team and costs agility at a time when applications are migrating to the cloud and teams need to move quickly to satisfy business needs. The other option is to configure brokers with public IPs, but that introduces other security considerations.

Zentera’s CoIP Platform implements a network overlay that quickly and securely connects LAN/WAN applications with Zero Trust application and network security. This model enables a complex hybrid Kafka application to be connected, even across environments where there is no inbound access on either side, or in an application intended to deploy across organizations – for example, between an enterprise and a vendor network.

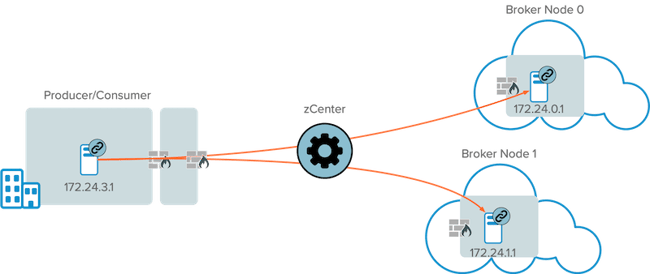

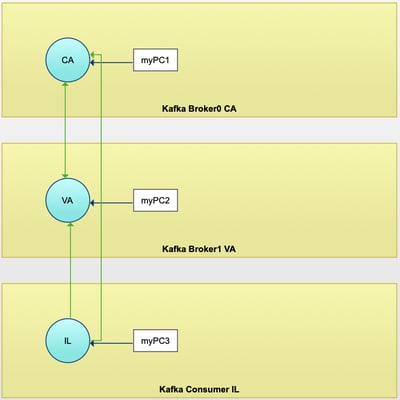

This tutorial shows how application teams can quickly set up a minimal Zero Trust connection for Kafka brokers, producers, and consumers over a distributed hybrid environment without building a VPN connection. We'll use the example configuration shown in the diagram above, with a producer/consumer connecting to two brokers across the CoIP Overlay.

| Note: the example IP addresses shown above are CoIP overlay addresses, not the physical IP addresses of the hosts. This tutorial assumes that all 3 machines have already been onboarded to the CoIP Platform by installing the zLink agent. |

| Note: The Zentera zCenter can be spun up in the cloud, using the trial on the AWS Marketplace. |

Kafka Configuration

We start by setting up a typical broker configuration.

- Download Kafka 2.12 (on all three nodes) and un-tar the package.

- On the broker nodes, edit server.properties under /config/ and make the following 3 changes:

  - Set the broker IDs as follows:

    - For Broker 0, set broker.id=0

    - For Broker 1, set broker.id=1

  - Set the brokers to listen on port 9092:

    - listeners=PLAINTEXT://0.0.0.0:9092

    Note: although Kafka supports encrypted transport, we will not be using it for this demo, as CoIP Platform already provides secure TLS 1.3 tunnels between the hosts.

  - Set the hostname and port the brokers will advertise to producers and consumers as follows:

    - For Broker 0: advertised.listeners=PLAINTEXT://172.24.0.1:9092

    - For Broker 1: advertised.listeners=PLAINTEXT://172.24.1.1:9092

    Note: we are advertising the CoIP addresses of the hosts, not the physical IP addresses, as that is the address the remote producer/consumer will use to access the brokers.
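To make the per-broker differences explicit, the three settings can be generated from the broker ID and its CoIP overlay address. The following is a minimal sketch, assuming the standard Kafka property names `broker.id`, `listeners`, and `advertised.listeners`; the function name `broker_overrides` and the `BROKERS` mapping are hypothetical helpers introduced here for illustration only.

```python
# Sketch: render the server.properties lines changed in this tutorial.
# broker_overrides and BROKERS are illustrative names, not part of Kafka or CoIP.


def broker_overrides(broker_id: int, overlay_ip: str, port: int = 9092) -> str:
    """Return the three property lines for one broker as a single string."""
    lines = [
        f"broker.id={broker_id}",
        # Bind on all interfaces; the overlay decides who can actually reach us.
        f"listeners=PLAINTEXT://0.0.0.0:{port}",
        # Advertise the CoIP overlay address, not the physical address.
        f"advertised.listeners=PLAINTEXT://{overlay_ip}:{port}",
    ]
    return "\n".join(lines)


# Overlay addresses from the example configuration in this tutorial.
BROKERS = {0: "172.24.0.1", 1: "172.24.1.1"}

if __name__ == "__main__":
    for broker_id, overlay_ip in BROKERS.items():
        print(f"# Broker {broker_id}")
        print(broker_overrides(broker_id, overlay_ip))
        print()
```

Only `broker.id` and `advertised.listeners` differ between the two brokers; the `listeners` line is identical on both.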

- Start the Zookeeper and Kafka servers on both brokers using the scripts in /bin/. Start Zookeeper first, since the Kafka broker connects to it at startup:

./zookeeper-server-start.sh ../config/zookeeper.properties

./kafka-server-start.sh ../config/server.properties

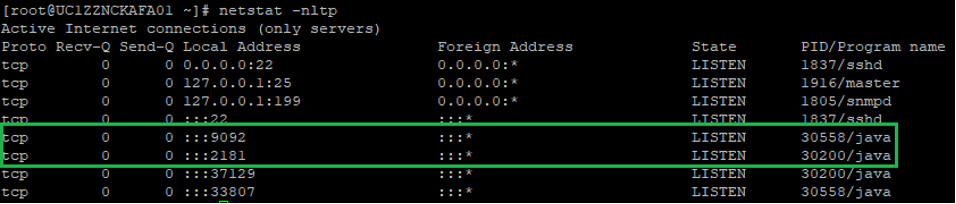

When properly started, you should see the broker listening on ports 9092 and Zookeeper on 2181:

- Create a Topic. A topic is a category or feed name to which records are published; here, we are creating a topic called "test" on the broker.

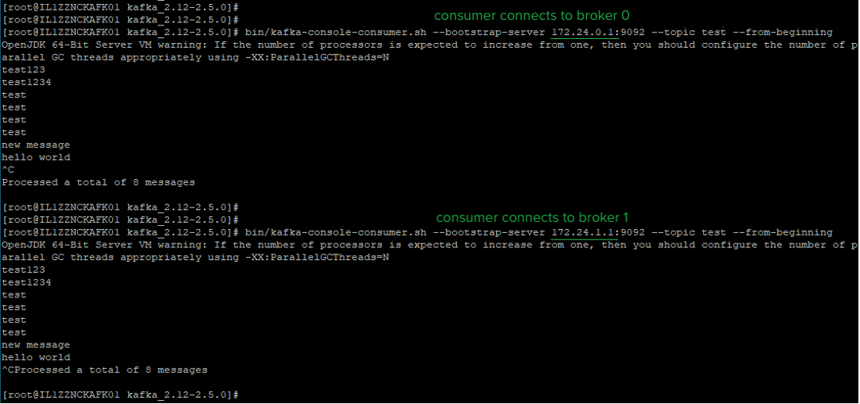

- On the producer/consumer node, start a console consumer subscribed to the topic:

./kafka-console-consumer.sh --bootstrap-server 172.24.0.1:9092 --topic test --from-beginning

At this point, the brokers have been set up to listen for inbound connections on port 9092, but the consumer has no route to them (yet). We'll set that up in the next step.

CoIP Platform Endpoint Onboarding and Access Policy Configuration

The next steps configure communications through the overlay so that the producer/consumer can reach the brokers. They are reviewed here at a high level; for details on each step, please refer to the CoIP Platform Administration Guide.

Configuring the Application Profile

An Application Profile describes the application topology and allows us to specify access connections amongst the different components. As you can see, the 2 brokers and the producer/consumer are all in separate, disconnected routable domains.

We set up a full mesh of connections between those servers, as represented by the green arrows in the Application Profile diagram. We used bidirectional CoIP WAN connections between the two brokers, so that either broker can initiate communications with the other. We then used a unidirectional CoIP WAN connection from the consumer to the two brokers, so that traffic originated by the broker to the consumer would be rejected.
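The directionality rules above can be reasoned about with a small model. This is only a conceptual sketch of the policy described in the text, not the CoIP Platform's API or data model; the class `WanConnection`, the function `may_initiate`, and the node names `broker0`, `broker1`, and `consumer` are all assumptions made for illustration.

```python
# Conceptual model of the connection directionality described above.
# Not a CoIP API: all names here are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class WanConnection:
    source: str
    target: str
    bidirectional: bool = False

    def permits(self, initiator: str, responder: str) -> bool:
        """True if this connection lets `initiator` open a session to `responder`."""
        if (initiator, responder) == (self.source, self.target):
            return True
        # The reverse direction is only allowed on bidirectional connections.
        return self.bidirectional and (initiator, responder) == (self.target, self.source)


CONNECTIONS = [
    WanConnection("broker0", "broker1", bidirectional=True),
    WanConnection("consumer", "broker0"),  # unidirectional
    WanConnection("consumer", "broker1"),  # unidirectional
]


def may_initiate(initiator: str, responder: str, connections=CONNECTIONS) -> bool:
    return any(c.permits(initiator, responder) for c in connections)


if __name__ == "__main__":
    for pair in [("consumer", "broker0"), ("broker0", "consumer"), ("broker1", "broker0")]:
        print(pair, may_initiate(*pair))
```

In this model the consumer can open sessions to either broker, either broker can open sessions to the other, and a broker cannot open a session to the consumer.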

Turning on CoIP Chamber

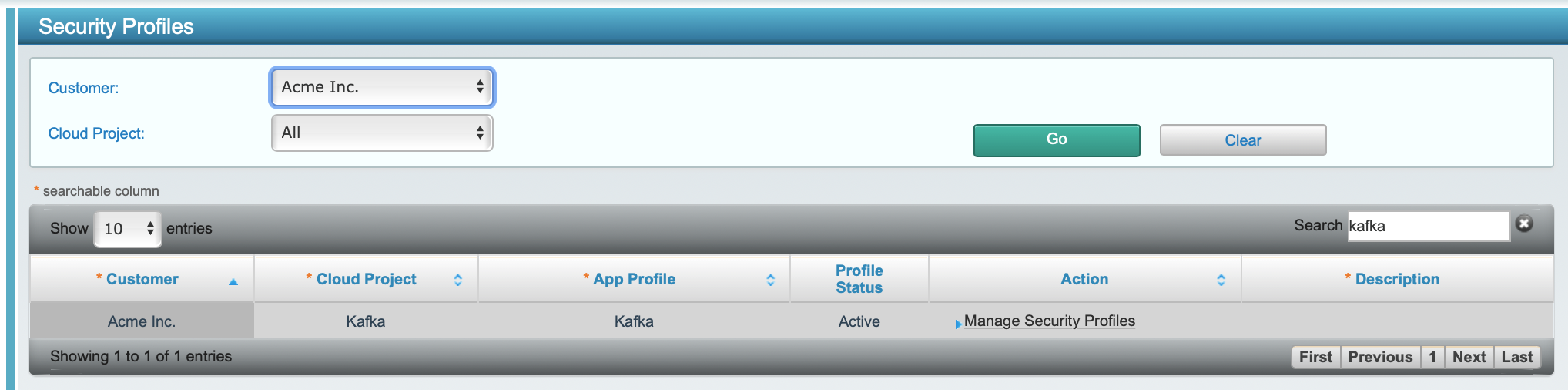

We then set the CoIP Chamber to Prevention mode for each server group in the zCenter portal: go to Project Management > Security Profiles, select the project, and click 'Manage Security Profiles'.

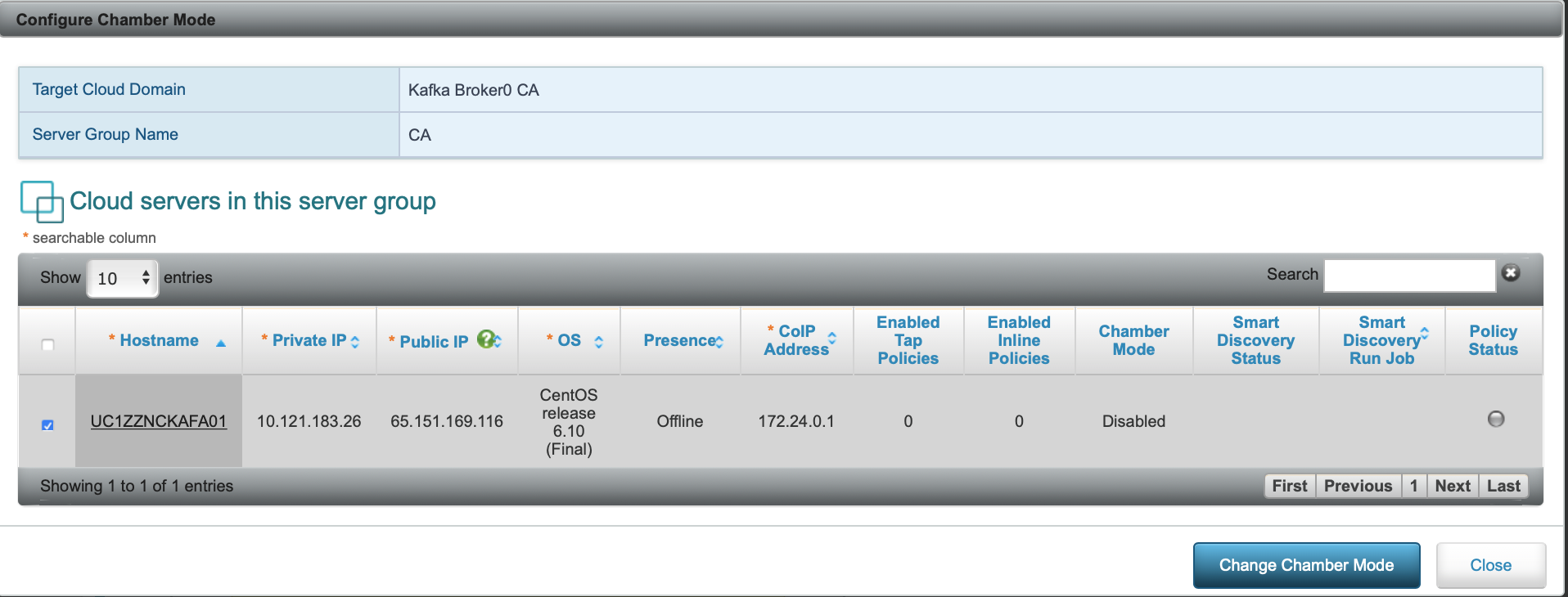

Once in the security profile, click on a server group; since the servers are already onboarded, use Change Mode for Individual Cloud Servers to switch each server to Prevention mode:

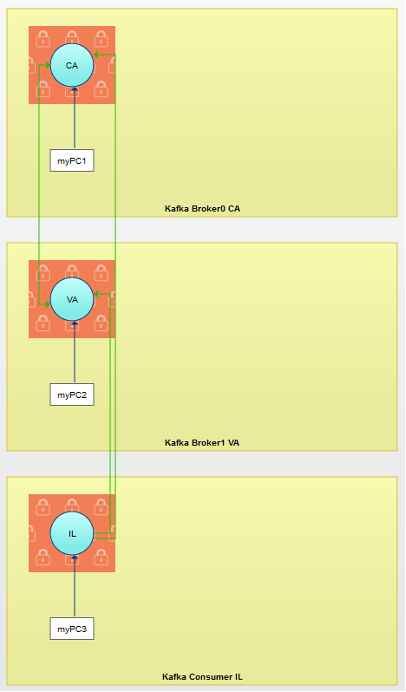

After making the changes, our application profile should now look like this:

The effect of turning on Prevention mode can be seen with the new red box surrounding each of the server groups, signifying that the only way in or out of those servers is now the CoIP Overlay connections. Note that you'll want to create an escape for your local network, which is shown above with the IP Component connections (myPC1, myPC2, and myPC3).

Turning on Prevention mode also enables Security Filters, which we'll configure next. Since we haven't yet configured them, at this point, all nodes can communicate with each other using all applications and ports, within the directionality constraints described above.

Setting up Security Filters

In this step, we'll use the granular security filters in the CoIP SASE Overlay to further restrict the CoIP WAN connections to a specific set of ports and application binaries.

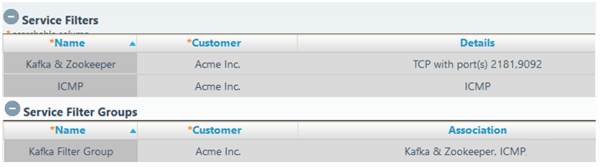

In the zCenter portal, from Security Rule Provisioning > Service Filter Groups, create the following service filters:

This creates service objects associating the Kafka service with TCP 2181 (Zookeeper) and 9092 (Kafka). We also create a service object with ICMP for convenience (so that we can ping between the machines), and then group both together in the filter group Kafka Filter Group to enable us to quickly add those filters to our CoIP WAN connections.
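The effect of the filter group can be sketched as an allow-list check. This is a conceptual illustration of the rules described above (TCP 2181 and 9092 plus ICMP), not how zCenter stores or evaluates filters; `ServiceFilter`, `KAFKA_FILTER_GROUP`, and `allowed` are hypothetical names.

```python
# Conceptual allow-list model of the Kafka Filter Group described above.
# Not a CoIP API: all names here are hypothetical.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ServiceFilter:
    name: str
    protocol: str                     # "tcp" or "icmp" in this sketch
    ports: frozenset = frozenset()    # empty set means "no port restriction"

    def matches(self, protocol: str, port: Optional[int] = None) -> bool:
        if protocol.lower() != self.protocol:
            return False
        return not self.ports or port in self.ports


KAFKA_FILTER_GROUP = (
    ServiceFilter("kafka", "tcp", frozenset({2181, 9092})),  # Zookeeper + Kafka
    ServiceFilter("ping", "icmp"),                           # convenience only
)


def allowed(protocol: str, port: Optional[int] = None, group=KAFKA_FILTER_GROUP) -> bool:
    """Traffic passes only if at least one filter in the group matches it."""
    return any(f.matches(protocol, port) for f in group)


if __name__ == "__main__":
    print("tcp/9092:", allowed("tcp", 9092))
    print("tcp/2181:", allowed("tcp", 2181))
    print("icmp:", allowed("icmp"))
    print("tcp/22:", allowed("tcp", 22))
```

Anything not matched by a filter in the group, such as SSH on TCP 22, is denied in this model.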

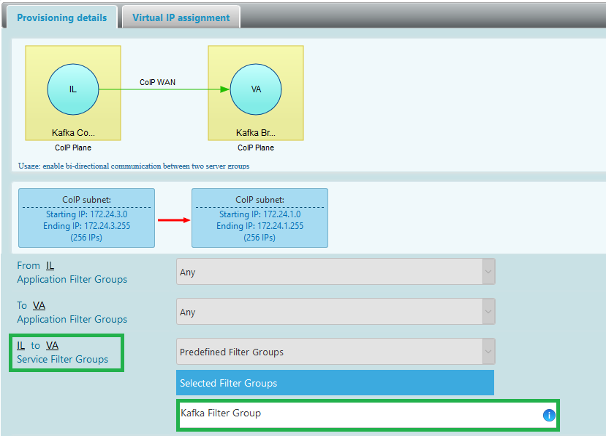

Go back to the security profile to make changes to the application profile's security settings. Clicking on the green CoIP WAN connection brings up a dialog allowing us to apply the service filter group to the CoIP WAN connections, as shown below, on the CoIP WAN from the consumer to a broker:

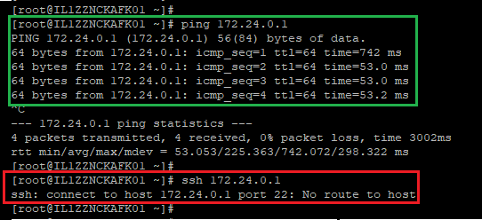

After saving those changes, the consumer is now restricted to using TCP ports 2181 and 9092 to connect to the brokers:

Note that ping works, because we included ICMP in the Kafka Filter Group, but ssh cannot connect:

These settings lock the overlay connection to minimize the access provided between the consumer and brokers.

Extending the setup

We can quickly add new producers/consumers in different sites to this setup, simply by onboarding them to CoIP and adding them to the consumer server group in the application profile. Regardless of where they are, consistent security policies will be applied across all of them. We can also use the server group micro-segmentation controls to prevent the consumers from using the overlay to communicate with each other.

Conclusion

With simple, pure-software steps, and without touching any underlying network infrastructure or perimeter security appliances, we have quickly set up secure multi-site, hybrid cloud connections for the Kafka application. The granular security controls also allowed us to minimize lateral attack surface exposure by narrowly limiting access to those secure connections.